At Facts.net, our dedicated team is the backbone of our mission to bring you the most accurate, engaging, and diverse facts from around the globe. Each team member’s unique skills and perspectives contribute to making Facts.net a trusted and dynamic platform. Let’s meet the core team:

Editorial Team

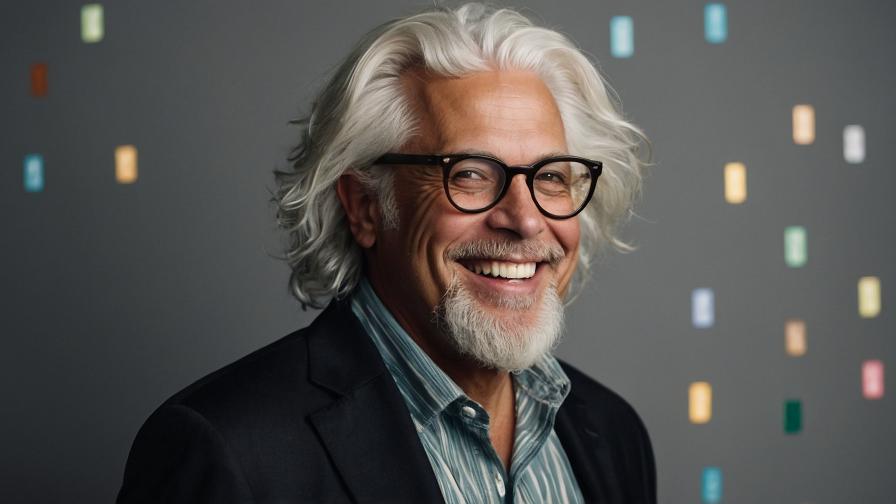

Sherman Smith, Chief Editor

Sherman brings over a decade of experience in digital publishing to oversee our content strategy and development. His commitment to factual accuracy and engaging storytelling ensures our content meets the highest standards.

Jessica Corbett, Senior Fact Checker

With a meticulous eye for detail and a Master’s degree in Library Science, Jessica leads our fact-checking efforts. Her dedication is crucial in upholding the integrity and accuracy of our content.

Kaitlin Vogel, Content Writer

Specializing in science and technology, Kaitlin has a knack for making complex topics accessible and engaging. Her well-researched articles are a testament to her passion for knowledge and clarity.

Creative Team

Nick Sullivan, Creative Director

Nick oversees the visual and interactive elements that make Facts.net a captivating platform. His background in graphic design and multimedia arts brings our facts to life in colorful and innovative ways.

Scott Reinhard, Graphic Designer

Scott’s creative talents shine through in the infographics and designs that accompany our facts, making complex information visually appealing and easy to understand.

Technical Team

Jackson Lewis, Chief Technology Officer

Jackson ensures that Facts.net remains cutting-edge, overseeing all technical aspects of the platform. His expertise in web development and AI keeps our site fast, secure, and user-friendly.

Rob Carroll, Web Developer

Rob plays a crucial role in developing new features and maintaining the site’s functionality. His coding expertise and innovative solutions help create a seamless experience for our users.

Marketing and Community Engagement

Alexis Rogers, Community Manager

Alexis is the voice of Facts.net within our community, managing our social media interactions and ensuring our readers feel valued and heard. Her efforts foster a welcoming and inclusive environment.

Product Review Team

Camryn Rabideau, Product Review Editor

This talented group of individuals works together to ensure that Facts.net remains a premier destination for those seeking factual, engaging, and diverse content. Their collective efforts make learning an enjoyable and enriching experience for our global audience.